Researchers Uncover Novel Principle Explaining How the Brain Adapts During Learning

Researchers from the MRC Brain Network Dynamics Unit and the Department of Computer Science at Oxford University have proposed a new principle to explain how the brain adjusts the connections between neurons during learning.

A New Learning Mechanism for the Human Brain

The study proposes that the human brain learns differently from backpropagation, the error-minimizing algorithm used to train artificial neural networks. Instead of directly adjusting synaptic connections to reduce error, the brain first settles neuron activity into an optimal, balanced configuration and only then alters the synaptic connections.
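The contrast can be pictured with a toy network of two weights. The sketch below is illustrative only and is not the study's code: it assumes a predictive-coding-style energy with scalar activities, and the function names (`relax_hidden`, `prospective_step`, `backprop_step`) and all numeric values are hypothetical choices made for this example.

```python
# Toy chain: input x -> hidden h -> output y, with scalar weights w1 and w2.
# Assumed energy (an illustration, not taken from the study):
#   E = 0.5 * (h - w1 * x)**2 + 0.5 * (target - w2 * h)**2

def relax_hidden(x, target, w1, w2, steps=200, rate=0.1):
    """Settle the hidden activity while the output is clamped to the target."""
    h = w1 * x  # start from the ordinary feedforward value
    for _ in range(steps):
        err_h = h - w1 * x        # mismatch between h and its prediction from x
        err_y = target - w2 * h   # mismatch between the target and its prediction from h
        h -= rate * (err_h - w2 * err_y)  # gradient descent on E with respect to h
    return h

def prospective_step(x, target, w1, w2, lr=0.1):
    """Settle activity first, then change the weights to match the settled activity."""
    h = relax_hidden(x, target, w1, w2)
    new_w1 = w1 + lr * (h - w1 * x) * x
    new_w2 = w2 + lr * (target - w2 * h) * h
    return new_w1, new_w2

def backprop_step(x, target, w1, w2, lr=0.1):
    """Change the weights directly along the gradient of the squared output error."""
    h = w1 * x
    err = target - w2 * h
    new_w1 = w1 + lr * err * w2 * x
    new_w2 = w2 + lr * err * h
    return new_w1, new_w2

if __name__ == "__main__":
    x, target, w1, w2 = 1.0, 1.0, 0.5, 0.5
    print("settled hidden activity:", relax_hidden(x, target, w1, w2))
    print("prospective weights:", prospective_step(x, target, w1, w2))
    print("backprop weights:", backprop_step(x, target, w1, w2))
```

In this sketch the only difference between the two update rules is the order of operations: `prospective_step` lets the hidden activity move toward the value it should have after learning and then adjusts the weights, while `backprop_step` adjusts the weights from the unmodified feedforward activity.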

“Prospective Configuration”: A New Learning Principle

The researchers call this new learning principle prospective configuration. Settling neural activity before changing connections reduces interference by preserving existing knowledge, which in turn speeds up learning.

Demonstration of Effectiveness

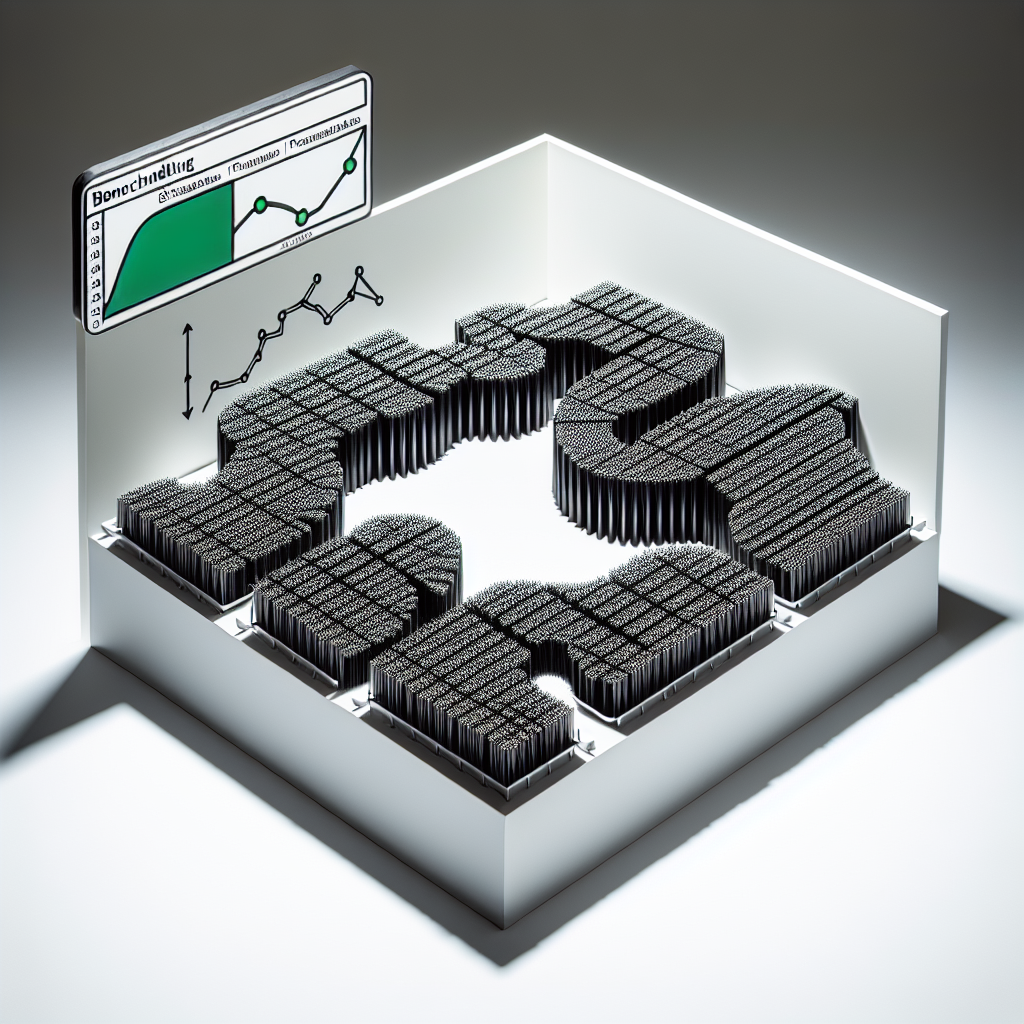

The researchers demonstrated the effectiveness of prospective configuration in simulations, comparing it with artificial neural networks on real-life tasks.

Reduced Interference During Learning

The researchers developed a mathematical theory showing that prospective configuration reduces interference between pieces of information during learning.

Implications for Neural Activity and Behavior

The principle explains neural activity and behavior patterns observed in numerous learning experiments better than artificial neural networks do.

Future Research and Applications

Professor Rafal Bogacz emphasized that future research is needed to understand how the algorithm is implemented in anatomically identified cortical networks.

Implementing Prospective Configuration

Dr. Yuhang Song, the study’s first author, suggested that running prospective configuration on current computers may be slow because they operate differently from biological brains. A new type of computer or dedicated brain-inspired hardware could solve this issue.